Tech Titans Defy the Fed: How the AI Boom Is Powering Growth Beyond Powell’s Reach

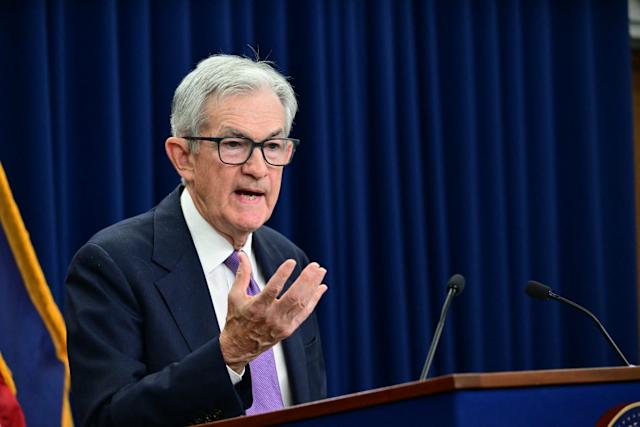

Federal Reserve Chair Jerome Powell has spent months warning that interest rates would remain higher for longer, a clear signal meant to cool an economy still running hotter than expected. But as Wall Street holds its breath for every Fed signal, the world’s biggest technology companies seem to be operating on an entirely different plane.

The latest earnings season made one thing clear: the AI-driven tech economy is decoupling from traditional monetary policy. Giants like Nvidia, Microsoft, Amazon, and Alphabet have posted record revenues and sharply higher investment, seemingly immune to higher borrowing costs.

Powell himself hinted earlier this year that the AI sector’s runaway momentum might not be constrained by interest rate policy. “Monetary tightening can influence demand, but some sectors are being propelled by structural innovation,” he said. In other words, tech doesn’t care about the Fed — and now it’s proving him right.

The Great Disconnect

For decades, the Fed’s interest rate decisions have been the dominant force shaping corporate behavior. Higher rates usually mean less borrowing, slower growth, and reduced risk appetite. But the post-pandemic economy — and particularly the AI boom — has rewritten that rulebook.

The companies leading the charge into artificial intelligence are not capital-constrained startups relying on cheap credit. They are cash-rich megacorporations sitting on hundreds of billions in reserves. Microsoft alone holds over $80 billion in cash and equivalents; Alphabet and Apple each have more than $100 billion.

When the Fed raises rates, smaller firms might tighten their belts, but the Big Tech elite simply redirect their war chests toward AI infrastructure, data centers, and acquisitions. “They’re operating in a different financial universe,” said one investment strategist. “The Fed can’t slow down innovation that’s being fueled by internal capital and global demand.”

The AI Economy Has Its Own Gravity

The explosion in AI investments has created what economists are calling a parallel economy — one that operates largely beyond the reach of traditional monetary levers.

Nvidia’s chips have become the foundation of global AI development, powering everything from corporate data centers to autonomous vehicles and generative language models. The company’s revenue has soared more than 200% in two years, pushing its valuation beyond $3 trillion — levels once reserved for oil giants and industrial titans.

At the same time, Microsoft’s partnership with OpenAI, Amazon’s rapid buildout of AI cloud services, and Google’s deep learning innovations have triggered a global race among corporations to adopt AI at scale. This corporate demand — not consumer credit or retail spending — is now a primary driver of U.S. GDP growth.

In recent quarters, AI-related capital expenditure from just five major tech firms accounted for nearly half of all U.S. business investment growth. That’s an unprecedented concentration of economic influence — and one that the Fed can’t meaningfully moderate through rate policy.

Innovation, Not Interest, Is Driving Growth

The underlying issue is structural. The Fed’s primary tool — the federal funds rate — works by influencing the cost of money. It affects mortgages, loans, and investments that depend on credit. But AI, cloud computing, and semiconductors are being funded by massive cash flows, not debt.

“The tech sector’s growth isn’t leveraged — it’s liquid,” said one economist. “They’re not borrowing to build data centers; they’re paying in cash.”

That makes them largely immune to the dampening effects of higher interest rates. As a result, while sectors like real estate and small business lending slow down, AI infrastructure spending continues to soar.

What emerges is a two-speed economy: one half driven by cash-heavy innovation, the other still vulnerable to traditional monetary tightening. It’s a structural divide that may redefine how policymakers think about inflation and growth in the years to come.

The Fed’s Dilemma

For Powell, this dynamic presents a challenge. The central bank’s goal is to cool inflation without stalling growth, but when the engine of growth is an innovation sector largely unaffected by rate hikes, the old playbook no longer applies.

AI investment is driving up wages in specialized fields, pushing demand for high-end components, and fueling a wave of corporate spending that’s difficult to temper. Even as other parts of the economy — like manufacturing and housing — respond predictably to monetary tightening, tech remains overheated.

“It’s like pressing the brakes on one part of the car while the engine in another keeps revving,” one analyst noted. “The Fed can slow credit markets, but it can’t slow technological acceleration.”

This divergence has led some economists to argue that the AI economy is becoming the new industrial revolution — a productivity surge so powerful that it reshapes macroeconomic relationships altogether. In that world, inflation, labor markets, and capital formation may follow entirely new dynamics, largely outside the Fed’s traditional sphere of control.

A Cultural Shift in Corporate Behavior

It’s not just about money — it’s about mindset. The tech elite see innovation as inevitable, not optional. Powell’s rate increases may raise costs across the economy, but for companies like Microsoft, Meta, and Amazon, AI is a strategic necessity, not a discretionary investment.

They’re not waiting for cheaper credit — they’re racing to secure dominance in what they see as the defining technological frontier of the century.

Executives from several major firms have echoed that sentiment. Microsoft CEO Satya Nadella recently described AI investment as “existential,” not cyclical. Amazon’s Andy Jassy said the company would “continue to invest heavily in AI infrastructure regardless of the rate environment.”

That attitude — a belief that innovation must continue at any cost — further insulates Big Tech from monetary policy. It’s not that they’re ignoring the Fed; it’s that they’re operating according to different incentives.

A New Economic Power Structure

This shift has profound implications for economic governance. Historically, central banks were the most influential actors in managing growth cycles. But as capital pools concentrate in private hands — and as tech firms become quasi-sovereign entities with their own ecosystems of capital, labor, and infrastructure — the balance of macroeconomic power is tilting.

When the Fed moves rates by 25 basis points, smaller firms react immediately. But when Microsoft or Nvidia announces a $10 billion AI data center investment, markets respond more dramatically. The center of gravity has shifted from Washington to Silicon Valley and Seattle.

“This is not just a new business cycle,” said a financial historian. “It’s a new form of economic sovereignty — where corporate innovation policy can override national monetary policy.”

The Future Beyond the Fed

The resilience of tech giants amid higher rates poses both promise and peril. On one hand, it suggests that the U.S. economy is innovating its way past stagnation — that productivity, not credit, is driving expansion. On the other, it raises concerns about inequality, concentration of power, and the Fed’s diminishing ability to guide macroeconomic outcomes.

Powell’s acknowledgment of this new reality was strikingly candid. “Some parts of the economy are beyond our reach,” he said during a recent address. “Our tools are powerful but not universal.”

That humility may mark a turning point in how policymakers view the economy — not as a single, rate-sensitive system, but as a hybrid landscape of capital-rich innovation and credit-dependent consumption.

Conclusion: A New Era of Economic Autonomy

As AI becomes the defining engine of global growth, tech giants are effectively writing their own rules — and perhaps their own monetary policy. Their balance sheets insulate them from interest rate pressure; their innovation pipelines drive global demand; their scale transcends traditional regulatory reach.

In that context, Powell’s observation rings prophetic. The Fed may still steer the broader economy, but the forces shaping the future — data, algorithms, and artificial intelligence — now lie beyond its grasp.